It has taken decades for computers to be accepted by broadcast engineers and master control room staff. In this paper we will take a look back and see how this acceptance came about and why it took so long.

This document is in two parts. In the first, we look at operations in master control rooms in the 80s, at the advent of automation systems, and at the two distinct approaches to the early development of video servers. In the second, we describe what will be the standard configuration in master control rooms over the coming decades, with computers fully accepted as reliable video sources.

The author of this paper has first-hand experience as co-founder of Vector 3, one of the most successful automation companies in the industry. He, his partner and their colleagues gained their expertise by providing computer-based solutions for the industry throughout the entire period covered by this document. As a result, his company’s broadcast solutions have been thoroughly tested, tweaked and optimised for smooth, error-free operation, and provide a complete and robust automation system.

The Hand-On-Tape Era

The advent of tape was a great technological advance: before its invention, master control rooms could only operate live. However, the wide plastic open reels of the 50s were unwieldy, and editing was painstaking manual work.

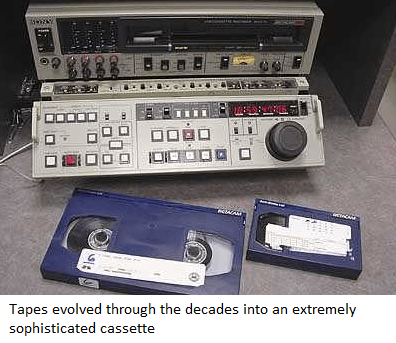

Tapes evolved over the decades into extremely sophisticated cassettes, and the old manual cutting and editing equipment used for open-reel tapes could happily be relegated to the museum. To cut a long story short, by the early 1980s videotape had been king of television facilities for 30 years.

The operations and procedures in master control rooms in those days looked something like submarine warfare. Several operators sat at a long table facing a stack of monitors, their fingers on panels covered with buttons of all shapes, sizes and colours, performing their routine with brisk and concise verbal exchanges.

In the gallery, as its inhabitants called it, the day’s work was dictated by the flow of tapes. Early each morning, a junior with a cart would arrive from the archive department, and the morning shift supervisor would go through the handover procedure with him. The junior carried two lists: tapes arriving for the MCR (those in the cart) and tapes to be returned to the archive. First the cart was emptied and each tape ticked off on the first list; then it was refilled with the tapes no longer needed in the MCR, which were ticked off on the second list. When the procedure was complete, everybody signed and they went their separate ways.

The supervisor then checked the playlist printout and made sure that all the tapes required for that day were either in his own mini archive or had just been delivered via the cart procedure. With millions of people watching the very thing his finger was triggering, the supervisor could receive calls from his superiors if things went wrong, or, if they went really wrong, from a very senior one.

The supervisor would check whether the content of multiple tapes could be compiled onto a single tape, so that these consecutive events could be triggered as a single block. Supervisors were always asking for more VTRs, since the more they had, the more comfortably and safely their teams could work. If more VTRs were not provided, supervisors would create compiled commercial blocks. These came at a certain cost in quality since, unlike in the digital age, copies were not error-free.

The moments before a long non-compiled commercial block in prime time were exhilarating. The supervisor, looking like Captain James T. Kirk, stood behind the operators as they gazed intently at the stack of monitors, while a tense junior waited in the rack room with his hand on a pile of tapes, anxiously reciting Alan Shepard’s prayer. As the millions in the audience listened to the anchor’s cheery “back-in-a-minute”, the gallery supervisor barked battle commands, secretly cursing slower editors who seemed oblivious to the urgency of the control room.

Hours later, in an intimate huddle prior to the graveyard shift, while the audience happily slept after a pleasant evening in front of the box, incoming and outgoing supervisors would discuss the plan for the small hours, when the broadcast would be peaceful enough to free up some VTRs to check tapes for the archives staff. One more day had passed, and another playlist sheet went to the bin with every line crossed off.

How things were in the good old days…

A junior goes into the rack room and loads some tapes into a number of VTRs following hand-written instructions from his shift supervisor. Once the cassettes are loaded and the VTRs are in operational mode, the VTR operator reviews their output in the monitor stack. Each monitor displays a time clock superimposed on the video — a relatively new advance at the time. Once he is satisfied, the operator disengages the heads so the tape won’t get damaged while in standby, and gives the all-clear to the supervisor.

All the staff wait and watch the red LEDs of the station’s clock, which is linked by RF to the Atomic Clock in Frankfurt, where a handful of atoms mark time for the whole European continent.

Someone calls out the countdown, perhaps the mixer operator, with his hand waiting on the lever, or maybe the supervisor himself, if the situation is critical enough: “Ten minutes, five minutes, two minutes, one minute, 45 seconds, 30 seconds, 15 seconds, 10, 5, 4 …”. The VTR operator engages the heads and pushes play. The image blinks in the second most central monitor in the stack (PVW) and the mixer operator gets ready to abort in case the tape jams. But the image stabilizes and he shouts “top!” or “in” or “air!” or whatever the standard expression is in that master control room. The audio man in his booth checks and carefully adjusts some mysterious-looking knobs.

One more successful transition. One more on-air event, marked off on the playlist sheet.

Robots and promises

The 24/7 on-air operation of cassette playout in the master control room required so many highly skilled people that, once closed-cassette technology became stable, some kind of industrial automation was clearly needed. The computer industry made some attempts to meet this need, but its solutions still relied on enormous open-reel tape systems for long-term archiving. In the computer industry, closed cassettes were used only for very small-scale operations, so there were no cassette cart machines available that could be adapted for broadcast.

In the 1980s the Sony Corporation launched one of the most inspired devices in the history of TV technology. It was called the Library Management System (LMS), and it became a status symbol among stations: those who owned an LMS belonged to the elite group of world-class broadcasters.

An LMS was basically a very large cabinet with an internal corridor and shelves for tapes on either side. Instead of human juniors loading tapes, two big robotic arms performed the task. On one side, an enclosure the size of a telephone box housed a stack of VTRs. LMSs came in different sizes, but five VTRs for 1000 tapes was average.

It was amazing to see the arms at work, but even more so when you understood what they were doing. Just like the human shift supervisors, the LMS operating system was working out ways to compile events on tape in advance. Sony’s Digital Betacam VTR could create copies whose quality was, for the first time, identical to the original, enabling blocks to be compiled with no loss of quality. The LMS software performed incredible calculations in advance, like a chess player.

The LMS was the first device in which the tape was no longer the main element. Identical sequences, termed “media” and identifiable with the same “media ID”, were recorded on different tapes. Multiple copies of each media file existed, and the LMS software vastly increased playout efficiency by copying, compiling and playing out optimised blocks.
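The shift from tapes to media IDs can be pictured as an index from each media ID to every tape holding a copy of it. The Python sketch below is hypothetical: the class names, the tape labels and the simple “first copy on a free tape” rule illustrate the idea only and are not Sony’s actual LMS logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TapeCopy:
    """One recording of a media item on a particular tape (hypothetical)."""
    tape_id: str
    som_frame: int  # frame on that tape where the media starts


class MediaIndex:
    """Maps a media ID to every tape copy of that media item."""

    def __init__(self) -> None:
        self._copies: dict[str, list[TapeCopy]] = {}

    def add(self, media_id: str, tape_id: str, som_frame: int) -> None:
        """Register one more copy of a media item."""
        self._copies.setdefault(media_id, []).append(TapeCopy(tape_id, som_frame))

    def pick_copy(self, media_id: str, busy_tapes: set[str]) -> Optional[TapeCopy]:
        """Return the first copy whose tape is not already in use, or None."""
        for copy in self._copies.get(media_id, []):
            if copy.tape_id not in busy_tapes:
                return copy
        return None


index = MediaIndex()
index.add("SPOT-0042", "TAPE-001", som_frame=0)
index.add("SPOT-0042", "TAPE-007", som_frame=45_000)
# TAPE-001 is loaded for another event, so the copy on TAPE-007 is chosen.
print(index.pick_copy("SPOT-0042", busy_tapes={"TAPE-001"}))
```

Keeping the playlist in terms of media IDs rather than tapes is what lets the machine choose among copies at the last moment.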

But the all-in-one approach was not without issues. A gallery boasting an LMS tended to be a slave to it, because the playlist fed into the machine became the de facto “master playlist”. Live events were treated as small islands, and it was unclear whether staff were controlling the LMS during commercial blocks or the other way round. Operators were equipped with a GPI button to tell the LMS when to resume automated playout once the humans had finished their brief episode of live programming.

The question of who was in charge produced many operational conundrums: the flexibility to change events at the last minute disappeared, and systematic workflows were imposed on people whose creativity had always come at the price of legendary delays in delivering the goods. Putting new tapes into an LMS was not easy, as it was busy with its own logical processes, and persuading its software to operate at the limit of its capacity was a challenge in itself.

At the same time that playout was becoming increasingly automated through the use of cart machines, scheduling was also being computerized. This initially caused many problems, as early programmers did not understand the workings of a master control room in detail. To start with, many systems calculated not in frames but in seconds. This probably did not seem a problem to computer geeks, but for gallery supervisors it was a nightmare. Programme times were rounded to the nearest half second, and the cumulative effect was that schedules could be out by 10 seconds or more after several hours of playout. Many stations had a process called “traffic” which, among other things, converted a playlist given in rounded seconds into frames. Computer-generated playlists were master control room staff’s first contact with computers, and for gallery supervisors in particular it was not a pleasant experience.
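A minimal Python sketch of how half-second rounding accumulates into schedule drift. It assumes 25 fps (625-line PAL), that the seconds-based system rounded each duration half-up to the nearest half second, and a hypothetical playlist in which every spot rounds the same way; the function names are illustrative and do not come from any real traffic system.

```python
import math
from fractions import Fraction

FPS = 25  # 625-line (PAL) television: 25 frames per second


def frames_to_timecode(frames: int, fps: int = FPS) -> str:
    """Format an exact frame count as HH:MM:SS:FF."""
    total_seconds, ff = divmod(frames, fps)
    hh, rest = divmod(total_seconds, 3600)
    mm, ss = divmod(rest, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def rounded_seconds(frames: int, fps: int = FPS) -> Fraction:
    """Duration as a seconds-based scheduler might hold it (assumption):
    rounded half-up to the nearest half second."""
    halves = math.floor(Fraction(2 * frames, fps) + Fraction(1, 2))
    return Fraction(halves, 2)


def cumulative_drift(durations: list[int], fps: int = FPS) -> Fraction:
    """Seconds by which the rounded schedule differs from the real one
    once every event has played (negative: the schedule runs short)."""
    real = Fraction(sum(durations), fps)
    scheduled = sum((rounded_seconds(d, fps) for d in durations), Fraction(0))
    return scheduled - real


# Hypothetical playlist: sixty spots of 30 s + 5 frames (755 frames each).
playlist = [755] * 60
print(frames_to_timecode(sum(playlist)))  # real length: 00:30:12:00
print(cumulative_drift(playlist))         # -12 (seconds)
```

Exact arithmetic with `Fraction` keeps the frame-accurate and rounded totals comparable without floating-point noise. A real playlist mixes durations that round up and down, so its drift would usually grow more slowly than in this deliberately one-sided example.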

With all its marvellous technology and its super-high-quality performance, the LMS was extremely expensive. The complexity of its setup and its arcane configuration settings meant the cost of ownership was too high even for TV stations used to paying three shifts of eight well-paid staff.

Odetics launched a much simpler device. Sony answered with Flexicart, Panasonic with SmartCart and Thomson with Procart. These new-generation cart machines were much smaller, and the idea was to feed them daily with the tapes required. Two approaches were proposed for on-air management. The traditional model called for the cart machines to operate with their own router, controlled remotely by their own software, as the LMS had done. They would deliver a signal over which the supervisor had no control (just as when the MCR handed the signal over to a live studio). With the second approach, the robots were used as telemanipulators: the VTR operator could control each VTR and the robot arm remotely from his table, select one and deliver its signal to the master control room mixer. If commercial blocks needed to be compiled, control room staff managed this manually.

Shift supervisors strongly favoured the second option, which gave them back control. But a third, even more alarming configuration was eventually to succeed. Engineering departments proposed that computers control not only the VTR router but also the MCR’s main mixer. They argued that a computer could position the VTRs, do the pre-roll, control the mixer and trigger events with greater precision than the human hand.

In practice, however, this was more complex than the visionaries had foreseen. A whole generation of operators saw how clumsy computers created havoc. Certain cart machines became famous for their stupidity and a “polar bear in the zoo” type syndrome was common, in which they performed the same action repeatedly, such as putting the tape in the VTR and taking it out again, over and over, until the supervisor finally unplugged and rebooted the machine. Viewers at home would see sudden rewinds, not realizing that this was an unfortunate side effect of early attempts at automation.

But as algorithms and software quality rapidly improved, timing accuracy returned to where it had been for decades, somewhere between zero and one frame, and automated master control rooms became the norm. A new generation of software programmers familiar with gallery operations did most of the work, and broadcast engineers superseded computer engineers in planning master control room automation.

Distrustful gallery teams would generally make and keep their own record of the true “SOM” (start of message: the point on a tape where a programme started), which they typically did not share with the rest of the facility. Computing islands were created to separate the MCR from the chaos of the rest of the TV station.

Life could have continued in this way but the computer industry had made a new promise to station owners: video could be converted into a computer file and played out as easily as a printer prints a playlist. Station managers were eager to try it and excited by the possibility of no longer needing the expensive VTR head cleaning procedures required for video cassettes.

So by the mid 90s the era of the tape seemed to be over. Shift supervisors, who had first been annoyed by the lack of frames in the computer-generated playlists of the 70s, then shocked by the inflexibility of the first generation of automation software in the 80s, were to face new and greater horrors with the next step in the revolution. It would take another 10 years to complete and, perversely, the richest stations would be the ones to suffer most.

Standard behemoths vs. dedicated dwarfs

Now, with the revolution complete, it is difficult to remember the excitement of the broadcast market in the mid 90s. The coalescence of computers and video exceeded all expectations once the original technology (selecting the colour of each point on the monitor by changing values in an associated memory buffer) was backed up by low-level software on which useful applications could be built.

Each IBC presented the visitor with more than just a selection of new products; it now offered entirely new markets to broadcasters. As usually happens during periods of rapid technological development, anything seemed possible and companies had difficulties deciding which of their teams’ inventions to push as their flagship product.

Within a short time the big names in the computer industry, who were already providing services to the big names in the broadcast industry, saw a new niche to be exploited: the master control room.

Broadcast corporations’ systems departments were at this time busy computerizing tape archiving and the wider workflow, including the creation of playlists, automated commercial blocks and rights management.

The idea was that once you had sampled the video frame by frame, it became “a set of 1s and 0s” that you could store and manage as a computer file. The maths was explained on paper napkins to many newcomers to the technology: 576 lines × 720 columns gave 414,720 points, or “pixels”, nearly half a million per frame.

Each “pixel” must contain enough information to specify a colour and, as computer techies explained to each other over and over again, the human eye has a very fine perception of colour: we can distinguish several million different colours. Using 8 bits (one byte) per colour gives $2^8 = 256$ levels, and 256 to the power of 3 (one value for each of the 3 colours emitted by the tubes) gives $256^3 = 16{,}777{,}216$, more than 16 million colours and beyond the human capacity to differentiate.

The final step is to multiply the half million points by the 3 bytes of colour information to find out how big a single frame is, and then to multiply the result by 25 frames per second to find the size on disk of one second of broadcast-quality video.
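The napkin arithmetic can be written out in a few lines of Python. This follows the text’s own simplification (uncompressed, 3 bytes per pixel, 25 frames per second) rather than any real studio format, and the per-hour figure is simply the same calculation carried one step further.

```python
LINES, COLUMNS = 576, 720   # visible picture of a 625-line frame
BITS_PER_COLOUR = 8         # one byte per colour component
COLOURS = 3                 # red, green and blue
FPS = 25                    # frames per second

pixels_per_frame = LINES * COLUMNS                    # 414,720
distinct_colours = (2 ** BITS_PER_COLOUR) ** COLOURS  # 16,777,216
bytes_per_frame = pixels_per_frame * COLOURS * BITS_PER_COLOUR // 8
bytes_per_second = bytes_per_frame * FPS
bytes_per_hour = bytes_per_second * 3600

print(f"{pixels_per_frame:,} pixels, {distinct_colours:,} colours")
print(f"{bytes_per_frame:,} bytes per frame")      # 1,244,160
print(f"{bytes_per_second:,} bytes per second")    # 31,104,000 (about 31 MB)
print(f"{bytes_per_hour:,} bytes per hour")        # about 112 GB
```

Roughly 31 MB for every second of picture, and well over 100 GB per hour, is the kind of figure that made early file-based designs look like a job for very large computers.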

Only much later was it discovered that far fewer bytes were needed and that there were multiple workarounds to save space. At the time, however, the mirage of huge files ushered in the era of the IT behemoths. Microcomputing had been eroding the market for minicomputers, and broadcasting looked like a very promising sector, one in which only really large-scale bit-crunchers could compete. Replacing an LMS with a large computer looked profitable and did not seem too difficult.

Over the years, the main computer manufacturers offered bigger and bigger monsters with immense storage capacities and bandwidths. The idea was that all video would flow as files inside the station. Because transfer rates were so slow, the obvious solution was to create a central storage repository where all the video files could be accessed from workstations for editing or playout.

From today’s perspective, these mammoth systems resemble those oddly shaped planes seen crashing in documentaries about the beginnings of human flight. But at the time they seemed like real, viable solutions. Some of them were truly sophisticated and, once the main problem was understood (computer bit rates are not constant but rise and fall, whereas video requires a constant, sustained bit rate), the products started to evolve.

This evolution did not last long. Once the magnitude of the redesign work required to guarantee a “sustained bit rate” was understood, development work stopped, and most of the products of this kind were discontinued. The broadcast industry’s technical requirements demanded a research investment that the potential sales could not justify. If standard IT technologies available at the time did not work (and they certainly did not), the market was not profitable. So, one by one, the computer manufacturers pulled out.

While computer manufacturers, working closely with the system departments of broadcast corporations, were coming to realize how complex this market was, traditional video manufacturers were following another path. They knew from the beginning that sustained bit rates were needed and that disk controllers were the key. They successfully developed disk controllers and managed to create systems capable of recording and playing, with small buffers to achieve frame-accurate output.
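The role of a small buffer can be illustrated with a toy simulation in Python. It assumes the disk delivers whole frames in irregular bursts (the pattern below is made up), that exactly one frame must leave the buffer every frame period, and that `prefill` is the number of frames loaded before playout starts. It sketches the principle only, not any manufacturer’s disk controller.

```python
def count_underruns(disk_reads: list[int], prefill: int) -> int:
    """Simulate one frame leaving the buffer per frame period.

    disk_reads[t] is how many frames the disk delivers during period t
    (bursty: sometimes several, often none). Returns the number of
    periods with no frame ready, i.e. a visible glitch on air.
    """
    buffered = prefill
    underruns = 0
    for delivered in disk_reads:
        buffered += delivered
        if buffered > 0:
            buffered -= 1   # one frame goes to air on time
        else:
            underruns += 1  # nothing to show: frame accuracy is lost
    return underruns


def min_prefill(disk_reads: list[int]) -> int:
    """Smallest pre-roll (in frames) that avoids every underrun."""
    prefill = 0
    while count_underruns(disk_reads, prefill) > 0:
        prefill += 1
    return prefill


bursty = [0, 0, 0, 4] * 25  # made up: 100 periods, 4 seconds at 25 fps
print(count_underruns(bursty, prefill=0))  # 3
print(min_prefill(bursty))                 # 3
```

The disk’s average rate here is exactly one frame per period, yet playout starting cold misses three frames; three frames of pre-roll are enough for clean output. Longer or less predictable gaps at the same average rate would call for a deeper buffer.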

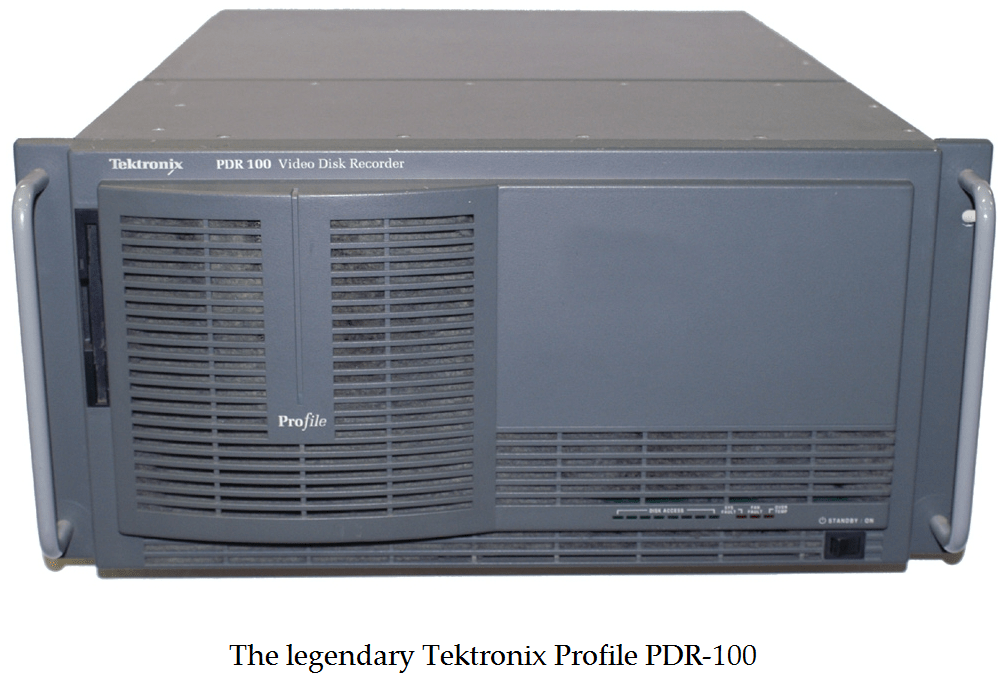

Being traditional video companies, they did not have the resources to develop a system capable of playing more than two or three channels or of holding a large amount of storage. In addition, because the technology was developed for this sole purpose, it was incompatible with any other computer technology. These products, and particularly the most successful of them, the Tektronix Profile, were reliable and aimed at a more modest target than those of their computer-manufacturer counterparts. Instead of computerizing the whole workflow of the station, they aimed only to replace VTRs, and only those used specifically for playout.

The commercial teams of big blue-chip computer companies had had their fingers burnt by a number of painful, expensive fiascos, and as a result the “dwarfs”, whose products became known as “videoservers”, came to dominate the market and take over the rack rooms.

The initial key to the success of these systems lay in the simplicity of their design, but in the long run this spelt their demise. As non-linear (computer) editing became more popular, it was clear that returning to baseband before loading material into the videoserver was not a good idea. So over the years, videoserver manufacturers tried to connect their servers to NLEs and to other videoservers in order to create larger systems. However, this was not simple, and the combination of cart machine and videoserver in cache mode remained the most popular configuration amongst broadcast engineers.

One of the technologies that contributed to the success of these proprietary dwarfs was video compression, which reduced the storage and bandwidth deemed necessary in the early days of video computing to a manageable fraction. The downside was that compression brought back the problem of copy degradation that digital video had removed from tape technology. Copying video from one disk to another by decompressing to SDI and then recompressing caused a loss of quality known as concatenation. In an IT environment, by contrast, compressed files could be copied directly, without decompression or recompression, and with no loss of quality.

Market demand for compatibility began to increase. There were two main obstacles to the advance of standards that would have allowed the interchange of files without concatenation. Firstly, there were the inherent technical barriers: non-standard hardware video servers used specially developed operating systems and invented-on-the-fly controllers, so building components such as SMB-compatible drivers from scratch was not simple. Most of the manufacturers resorted to FTP emulators that made access to files possible but slow and rudimentary.

The second obstacle was commercial. Industry committees held ongoing meetings that went nowhere because the big names did not really want standards. Each dreamed of a broadcast market entirely dominated by its own brand. Years of discussion led only to the concept of the “wrapper”, and after close to another decade a semi-common wrapper was agreed upon: MXF (Material Exchange Format). Broadcast engineers did not push strongly for integration, since they were familiar with concatenation, which was similar to the copy degradation of the analogue era, and by that time they had developed a certain phobia of multi-brand integration based on pseudo-standards that nobody could enforce.

Nowadays just a few of these systems still exist, and the pressure for them to become computers has become irresistible. Exchanging files with third parties has become as important as playing or recording them. The revolution is over, and an IT-based master control room with broadcast quality and broadcast reliability is not only possible but has become the norm. And just as the jet planes of today are very similar to those of the 60s, the current look of IT-based multichannel master control rooms will probably survive as long as the broadcast industry itself.